How to reduce your A/B testing tool's page speed impact

Client-side A/B testing tools get criticised (rightly so) for loading huge chunks of JS synchronously in the head. Despite the speed impact, the experiments these tools run deliver far more value than they cost. And luckily, we can help manage the problem in a few ways.

Here's how we keep container weight down at Mint Metrics when running a client-side A/B testing tool for our clients:

Optimisations for all split testing tools

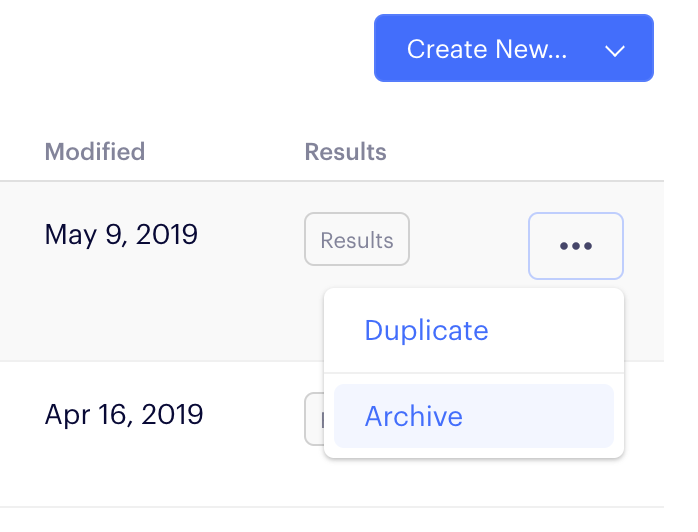

1. Archive unused experiments, triggers and features

Every feature and experiment inside your container adds weight. Deleting them, or archiving them for later use, shrinks the container back towards its original, small size.

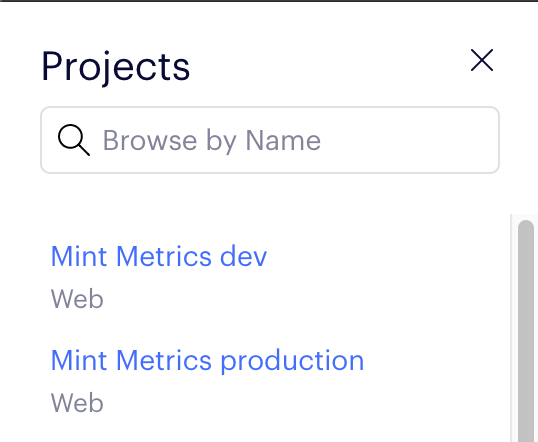

2. Build experiments in a separate (non-production) container

In many split testing tools, unlaunched experiments and triggers (e.g. tests you're still building) are still included in the container code. To keep production nice and fast, and free from tests in development, just create a new container for dev:

Optimizely and other tools allow you to create many different environments for this purpose.

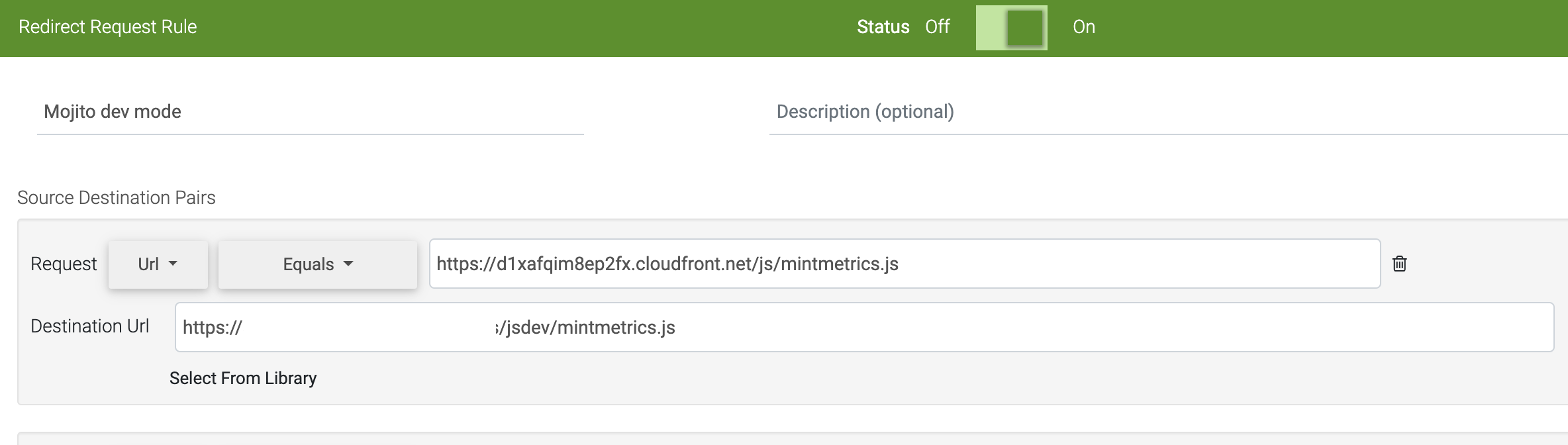

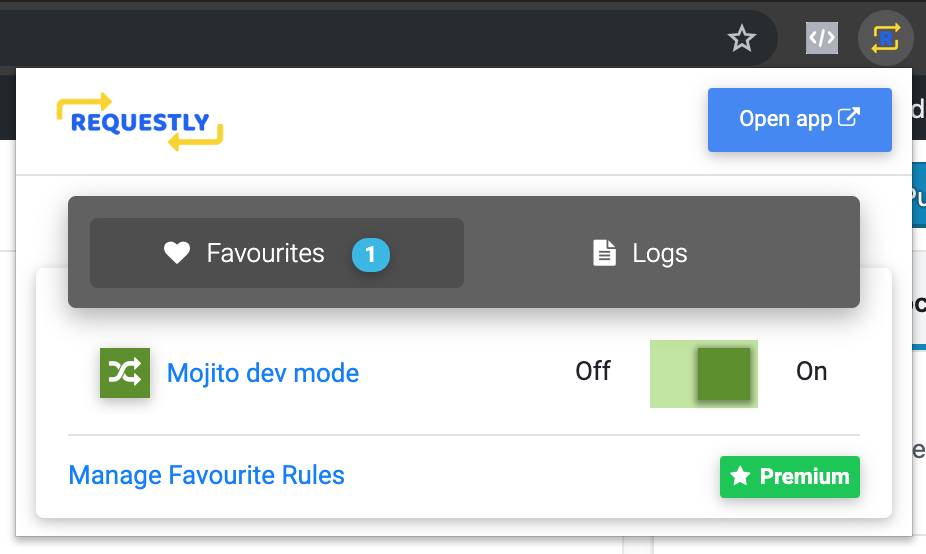

Obviously you'll need to change your snippet, right? Wrong ;) You can use a tool like Requestly for Chrome to swap your production snippet for your development snippet. It's just like a MITM attack, but one you want to happen:

Once you have the rule set up, you can toggle back and forth between dev mode and production.
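The swap itself is configured in Requestly's UI, but the rule boils down to a URL match and rewrite. Here is a minimal sketch of that logic, assuming the snippet is loaded from a fixed URL; the example URLs and IDs in the usage note are hypothetical placeholders, not real containers.

```typescript
// Sketch of the matching a request-rewrite rule performs. The URLs passed in
// are assumptions: substitute your own production and development snippet URLs.
export function swapSnippet(
  requestUrl: string,
  prodSnippetUrl: string,
  devSnippetUrl: string,
): string {
  const req = new URL(requestUrl);
  const prod = new URL(prodSnippetUrl);
  // Match on host + path only, so a cache-busting query string doesn't stop the swap.
  const isProdSnippet = req.host === prod.host && req.pathname === prod.pathname;
  // Every other request on the page passes through untouched.
  return isProdSnippet ? devSnippetUrl : requestUrl;
}
```

For example, `swapSnippet("https://cdn.optimizely.com/js/111.js", "https://cdn.optimizely.com/js/111.js", "https://cdn.optimizely.com/js/222.js")` returns the dev URL, while any other script URL comes back unchanged.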

3. Make use of existing libraries and tools for experiment builds

Complex experiments often rely on a lot of common helper functions: cookie manipulation, tricky DOM traversal without jQuery (e.g. insertBefore), or even the odd event your site already fires that you can listen for instead of implementing a DOM mutation observer. Where your site already ships these helpers, reuse them rather than bundling extra copies into the container.
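Where you do need your own helpers, they can stay tiny. The sketch below shows two: listening for a site event instead of running a MutationObserver, and reading a cookie from a `document.cookie`-style string. It assumes your site dispatches its own events on `document`; the event name `cart:updated` and cookie name `exp_checkout` are hypothetical.

```typescript
/**
 * Run variant code when the site fires one of its own events, rather than
 * watching the whole DOM with a MutationObserver. Returns an unsubscribe function.
 */
export function onSiteEvent(
  target: EventTarget,
  eventName: string,
  applyVariant: (event: Event) => void,
): () => void {
  target.addEventListener(eventName, applyVariant);
  return () => target.removeEventListener(eventName, applyVariant);
}

/** Read one cookie value from a `document.cookie`-style string ("a=1; b=2"). */
export function readCookie(cookieString: string, name: string): string | null {
  for (const part of cookieString.split(";")) {
    const pair = part.trim();
    const eq = pair.indexOf("=");
    // Compare the exact cookie name so "exp" doesn't match "exp_checkout".
    if (eq !== -1 && pair.slice(0, eq) === name) {
      return decodeURIComponent(pair.slice(eq + 1));
    }
  }
  return null;
}
```

In the browser you would call `onSiteEvent(document, "cart:updated", () => { /* apply variant */ })` and `readCookie(document.cookie, "exp_checkout")`.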

If possible, remove the SaaS vendor's tracking library and rely on a purpose-built analytics library for your tracking instead.
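In practice, that means your experiment code reports exposures through the analytics queue your site already loads. A minimal sketch, assuming an array-style queue such as Google Tag Manager's `dataLayer`; the event and field names are hypothetical and should follow your own analytics schema.

```typescript
// Hypothetical exposure event shape: adapt the names to your analytics schema.
export interface ExposureEvent {
  event: "experiment_exposure";
  experiment_id: string;
  variant_id: string;
}

// Push an exposure onto an existing array-style analytics queue,
// so the vendor's own tracking library isn't needed in the container.
export function trackExposure(
  queue: object[],
  experimentId: string,
  variantId: string,
): ExposureEvent {
  const payload: ExposureEvent = {
    event: "experiment_exposure",
    experiment_id: experimentId,
    variant_id: variantId,
  };
  queue.push(payload);
  return payload;
}
```

On a GTM site the queue would be `window.dataLayer`; with another analytics library you would call its own tracking function instead.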

4. Set cache-control headers on the container

Cache-control headers direct the browser to cache your container snippet for a specified time (among other directives). It's useful for priming caches between you and your users (when you specify public) as well as caching a copy on the user's local device - reducing latency and page weight on subsequent loads within the specified max age.
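To make "within the specified max age" concrete: a cached response is reused while its age is below `max-age`. The sketch below is a simplified model of that check; it deliberately ignores `no-cache`, `no-store`, `s-maxage`, `Expires` and revalidation, which real browsers also take into account.

```typescript
// Extract the max-age directive (in seconds) from a Cache-Control value.
export function parseMaxAge(cacheControl: string): number | null {
  for (const directive of cacheControl.split(",")) {
    const [key, value] = directive.trim().split("=");
    if (key.toLowerCase() === "max-age" && value !== undefined) {
      const seconds = Number.parseInt(value, 10);
      return Number.isNaN(seconds) ? null : seconds;
    }
  }
  return null;
}

// Simplified freshness check: the cached copy is reused while age < max-age.
export function isFresh(ageSeconds: number, cacheControl: string): boolean {
  const maxAge = parseMaxAge(cacheControl);
  return maxAge !== null && ageSeconds < maxAge;
}
```

With `max-age=300`, a container fetched two minutes ago is served from cache, while one fetched five or more minutes ago is requested again.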

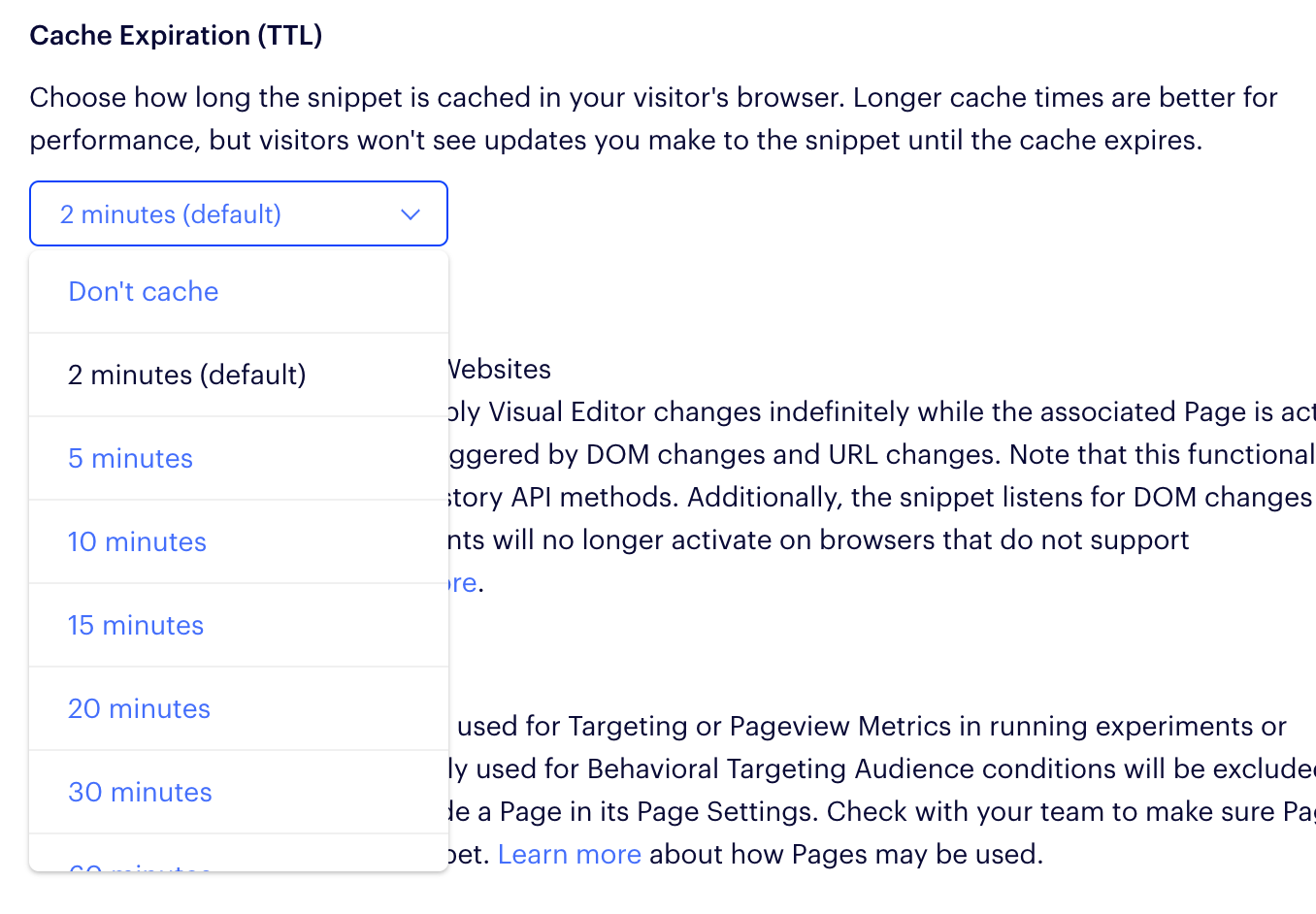

Optimizely gives you the option to cache the container for a short period, right inside the container settings:

The higher the TTL, the fewer times browsers will download a fresh copy of the container.

The same goes for on-premise tools like Mojito: you simply set the HTTP Cache-Control header yourself. Depending on how you host it and where you store your variant code, you could set a high max-age (in seconds); we typically use 5 minutes (300 seconds) for hosted containers at Mint Metrics:

cache-control: max-age=300,public
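If you serve the container from your own edge function or server, setting that header is one line. A minimal sketch, assuming a fetch-style runtime where `Response` is a global (e.g. Deno, Cloudflare Workers or Node 18+); how you load the container source is left to your setup.

```typescript
// 5 minutes, matching the header above.
export const CONTAINER_MAX_AGE_SECONDS = 300;

// Wrap the container JS in a response that browsers and shared caches may store.
export function serveContainer(
  containerJs: string,
  maxAgeSeconds: number = CONTAINER_MAX_AGE_SECONDS,
): Response {
  return new Response(containerJs, {
    status: 200,
    headers: {
      "Content-Type": "application/javascript; charset=utf-8",
      // "public" lets intermediary caches (e.g. a CDN) keep a copy too.
      "Cache-Control": `max-age=${maxAgeSeconds}, public`,
    },
  });
}
```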

Most testing tools set very small max-age directives (or none at all) because they host experiments within the container, so any change to an experiment has to reach users quickly.

Optimisations for hosted A/B testing tools like Optimizely & Convert.com

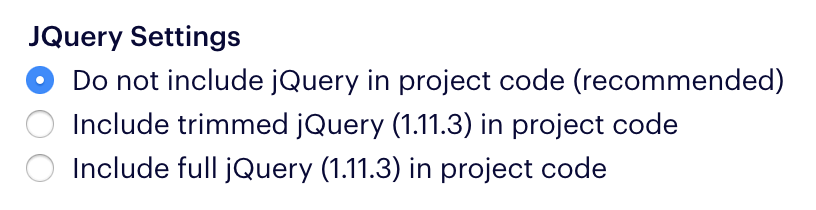

5. Remove jQuery as a dependency

You likely already have jQuery in your application, and chances are your transformations can be written in pure JS anyway. Removing jQuery from the container cuts out literally tens of kilobytes of JS.
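Most variant code only needs a handful of jQuery calls, and each has a short native equivalent. A minimal sketch replacing `$(selector).text(value)`; it types the root loosely so it accepts `document` in the browser (or any element) and can be exercised without a DOM. The selector and copy in the usage note are hypothetical.

```typescript
// Minimal shapes so the helper accepts `document` or any Element as the root.
interface TextNodeLike {
  textContent: string | null;
}
interface QueryRoot {
  querySelectorAll(selector: string): ArrayLike<TextNodeLike>;
}

// Native replacement for jQuery's $(selector).text(value).
// Returns how many elements were updated, so the variant can detect a missing target.
export function setText(root: QueryRoot, selector: string, text: string): number {
  const nodes = root.querySelectorAll(selector);
  for (let i = 0; i < nodes.length; i++) {
    nodes[i].textContent = text;
  }
  return nodes.length;
}
```

In the browser: `setText(document, ".hero .cta", "Start free trial")`.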

6. Minify large items in the variant code (e.g. large JSON objects)

Tools like Optimizely and Convert.com don't always minify your code (Optimizely doesn't minify it at all). That's the expected, safe behaviour, given you have no control over your hosted A/B testing tool's minification process.

Instead, you can minify large variant code manually before adding it to the tool. Try UglifyJS, which you can run online.
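For large JSON objects in particular, you don't need a JS minifier: parsing and re-serialising the JSON strips all the whitespace. A minimal sketch, assuming the object is valid JSON (not a JS object literal with comments or trailing commas, which `JSON.parse` rejects).

```typescript
// Re-serialise JSON without whitespace; throws if the input isn't valid JSON.
export function minifyJson(source: string): string {
  return JSON.stringify(JSON.parse(source));
}

// Size in bytes as UTF-8, i.e. roughly what the container pays for the string.
export function byteSize(text: string): number {
  return new TextEncoder().encode(text).length;
}
```

Comparing `byteSize` before and after tells you whether the saving is worth it for a given object.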

Optimisations for an on-premise, open-source A/B testing tool like Mojito

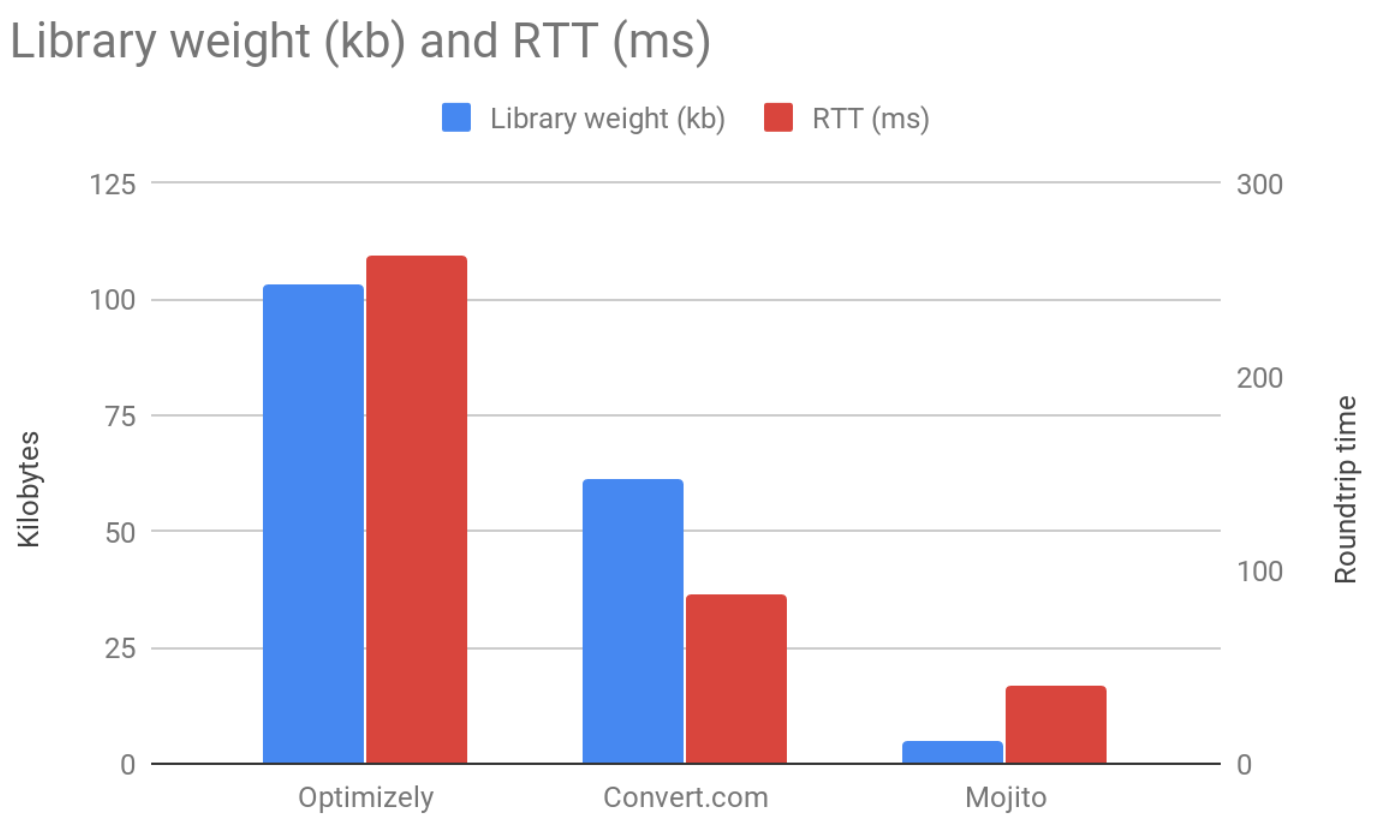

We think open-source, on-premise tools are the fastest because they're much smaller (from 5kb, versus from 50kb for typical hosted solutions) and you have full control over what code goes into them.

7. Embed the library & experiments into your app and avoid extra HTTP requests

The Mojito split testing library's core JS doesn't change once you set it up, so you could embed it into your core site scripts and let it share their caching model, saving a separate HTTP request.
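If your build doesn't already bundle third-party scripts, plain concatenation works. A minimal sketch of that step, assuming you already have the library and experiment sources as strings; the file names are hypothetical. The extra semicolons guard against one file ending without a semicolon and the next file's first line being parsed as a continuation of it.

```typescript
// One script to embed: a label for the comment banner plus its source.
interface ScriptPart {
  name: string; // hypothetical file name, used only as a comment banner
  source: string;
}

// Concatenate scripts into one bundle, separating each with defensive semicolons.
export function bundleScripts(parts: ScriptPart[]): string {
  return parts
    .map(({ name, source }) => `/* ${name} */\n;${source.trim()}\n;`)
    .join("\n");
}
```

For example, `bundleScripts([{ name: "mojito.js", source: librarySource }, { name: "experiments.js", source: experimentsSource }])` yields a single script to ship alongside (or inside) your site's core JS.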