Partitioned ramps: A surprising feature of using hash functions for A/B split tests

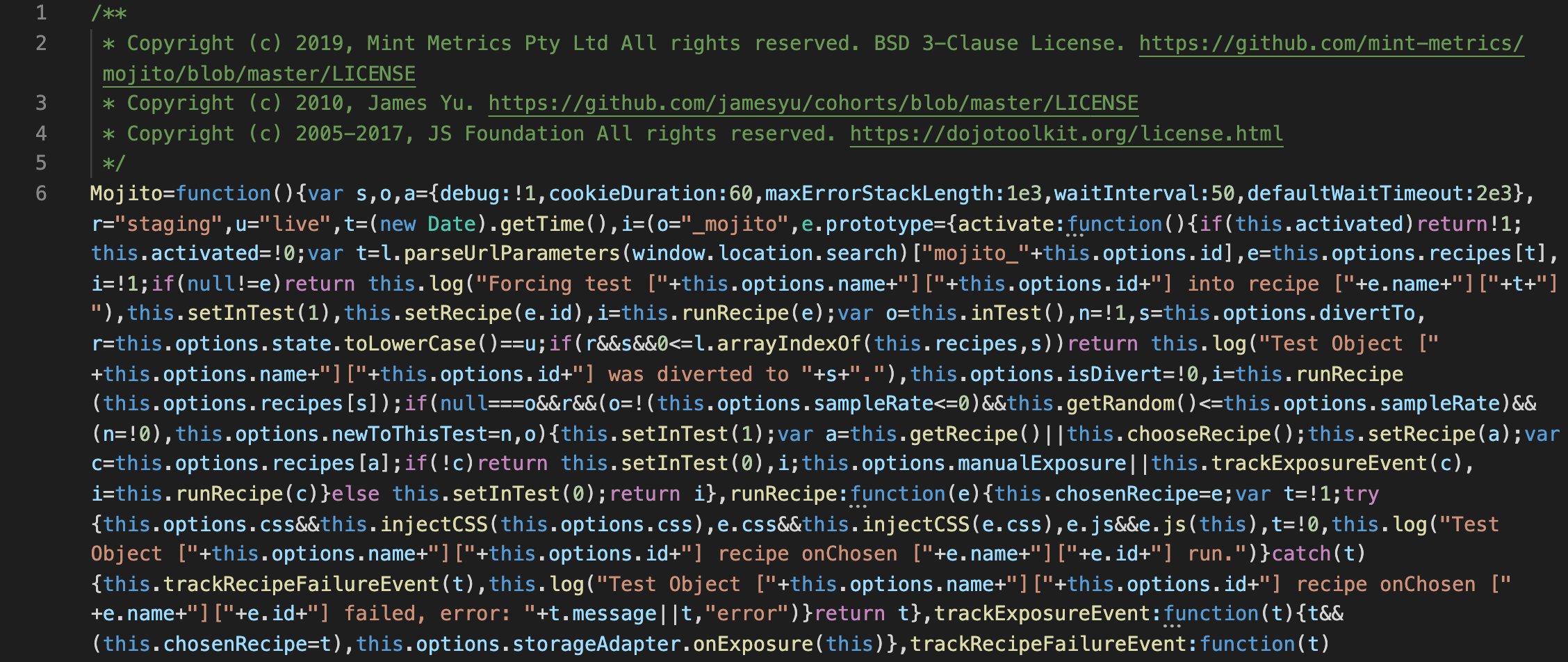

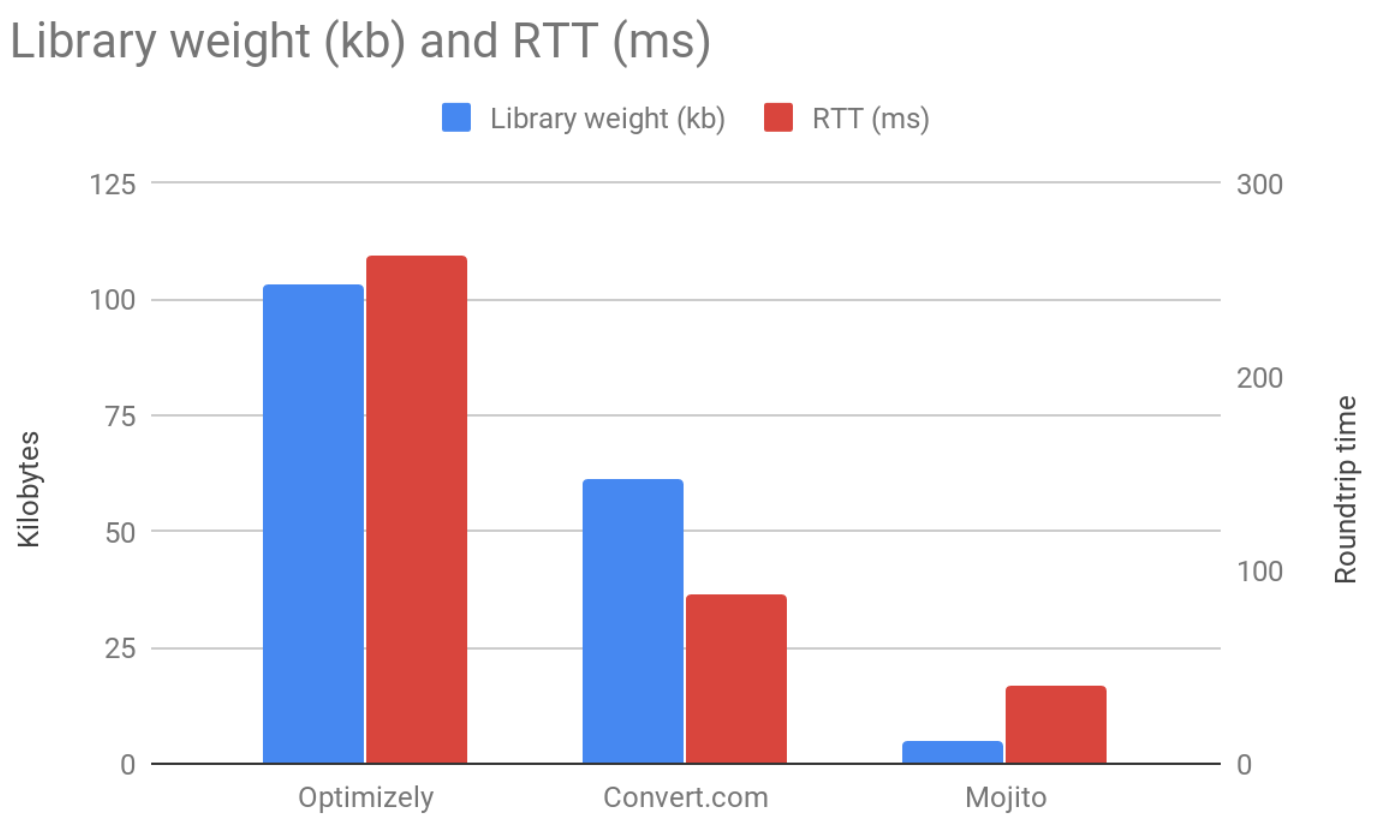

Whilst improving Mojito's PRNG and devising an ITP2.X workaround last year, we introduced a modular splitting tool that lets users split traffic with hash functions. We've been amazed by the features that hash functions enable in split testing, such as:

- Deterministic results: Users will always be bucketed the same way for a given input, regardless of when or where the decision is made (e.g. client-side, server-side, web or app)

- Sufficiently random: The hashed outputs are uniformly distributed, even when the inputs are near-identical
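
To make those two properties concrete, here is a minimal sketch of hash-based bucketing. It is not Mojito's actual implementation: the function names `bucket` and `assign`, the choice of SHA-256, and the `test_id:user_id` input format are all assumptions made for illustration.

```python
import hashlib


def bucket(user_id: str, test_id: str) -> float:
    """Map a (test, user) pair to a decimal in [0, 1).

    Hypothetical helper: SHA-256 and the "test_id:user_id" key format
    are illustrative choices, not Mojito's own.
    """
    key = f"{test_id}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Take the first 6 bytes (48 bits) so the division below is exact
    # in floating point and the result can never round up to 1.0.
    return int.from_bytes(digest[:6], "big") / 2**48


def assign(user_id: str, test_id: str, recipes=("control", "treatment")) -> str:
    """Pick a recipe by slicing [0, 1) into equal-width ranges."""
    value = bucket(user_id, test_id)
    return recipes[int(value * len(recipes))]


if __name__ == "__main__":
    # Same input, same answer, wherever and whenever this runs.
    print(assign("user-1001", "homepage-test"))
    # Near-identical inputs still land on unrelated values.
    print(bucket("user-1001", "homepage-test"))
    print(bucket("user-1002", "homepage-test"))
```

Because the decision depends only on the input string, any client or server that knows the user ID and test ID can reproduce it without sharing state.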

But hiding in plain sight was a novel ramping process that Lukas Vermeer, Booking.com's Director of Experimentation, pointed out to us. We'd not encountered it before, but now that we were using hash functions, it was possible...