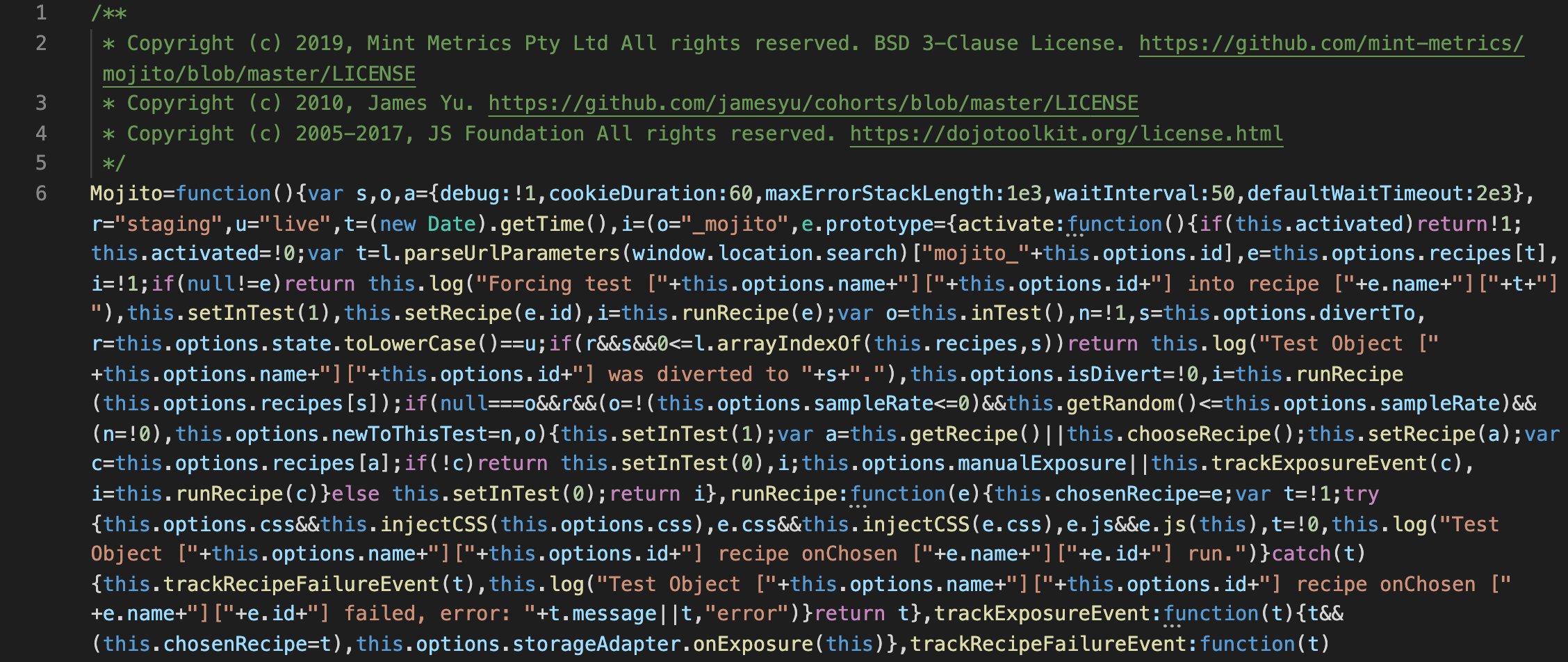

Using YoY/MoM conversion rate goals as targets can backfire

Image credit: Field & Stream

Product teams commonly set and revise their goals at the end of each year. We often see conversion rate goals written like this:

Increase the conversion rate from 6.7% to 7.4%

When a goal compares an absolute conversion rate between two date ranges, as the one above does, teams may end up working against each other.
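One way this can play out is through a change in traffic mix. The sketch below is an illustration with made-up numbers, not data from any real product: the segment names, visit counts, and conversion counts are all assumptions. It supposes one team improves the conversion rate within every segment while another team doubles the volume of new, lower-converting traffic.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    visits: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.visits


def blended_rate(segments: dict[str, Segment]) -> float:
    """Overall conversion rate across all segments."""
    total_visits = sum(s.visits for s in segments.values())
    total_conversions = sum(s.conversions for s in segments.values())
    return total_conversions / total_visits


# Hypothetical baseline period: blended rate works out to 6.7%.
before = {
    "returning": Segment(visits=4000, conversions=400),  # 10.0%
    "new": Segment(visits=6000, conversions=270),        # 4.5%
}

# Hypothetical later period: both segment rates improve,
# and new-visitor traffic doubles.
after = {
    "returning": Segment(visits=4000, conversions=440),  # 11.0%
    "new": Segment(visits=12000, conversions=600),       # 5.0%
}

for name in before:
    print(f"{name}: {before[name].rate:.1%} -> {after[name].rate:.1%}")
print(f"blended: {blended_rate(before):.1%} -> {blended_rate(after):.1%}")
```

In this made-up scenario every segment converts better and total conversions rise from 670 to 1,040, yet the blended rate falls from 6.7% to 6.5%. A team measured on the blended rate would have a reason to resist the traffic growth that another team is measured on.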